Roc Toolkit 0.3 is out!

What’s new

Roc Toolkit implements real-time audio streaming over unreliable networks like the Internet and Wi-Fi. It works on Linux and macOS and provides a C library, CLI tools, modules for PulseAudio and PipeWire, and an Android app.

The 0.3 release was focused on:

- reducing the minimum allowed latency

- running in CPU-constrained environments

- extending the C API

Full changelog is available here.

Latency

To allow lower latencies, two changes were made:

- All pipeline elements were reworked to accept arbitrary frame sizes. Previously, some of them used fixed-size frames, which limited the minimum possible latency.

- A new clock synchronization profile was implemented, suitable for low latencies. The clock synchronization algorithm is responsible for keeping the latency close to the target value and for compensating clock drift between sender and receiver.

  The existing profile, now called “gradual”, is very smooth and tolerates high network jitter, but can cause (slow) latency oscillations, which limits the minimum latency. The new profile, called “responsive”, is designed for environments with low jitter and low latency. It reacts more quickly and does not cause large oscillations.

  By default, the profile is selected automatically based on the requested latency.
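To make the idea of clock synchronization more concrete, here is a toy simulation. It is only a sketch under stated assumptions, not Roc's actual algorithm: the receiver queue is modeled in samples, the sender clock is assumed to run 200 ppm fast, and a simple proportional controller nudges the consumption rate towards a target latency. The function names, the gains, and all numbers are made up for illustration. A low gain loosely mirrors the spirit of a smooth, slow profile, and a high gain the spirit of a quicker one.

```python
def simulate(gain, target=480.0, start=600.0, drift_ppm=200.0,
             rate_hz=48000, tick_ms=1.0, ticks=20000, max_adjust=0.005):
    """Toy receiver queue with a proportional latency controller.

    Returns the queue length (in samples) after every tick.
    All parameters are hypothetical and chosen only for illustration.
    """
    per_tick = rate_hz * tick_ms / 1000.0            # nominal samples per tick
    produced = per_tick * (1.0 + drift_ppm * 1e-6)   # sender clock runs slightly fast
    queue = start
    history = []
    for _ in range(ticks):
        # Relative distance from the target latency.
        error = (queue - target) / target
        # Rate adjustment: positive drains the queue faster, negative slower.
        # It is clamped so the scaling factor stays close to 1.0.
        adjust = max(-max_adjust, min(max_adjust, gain * error))
        consumed = per_tick * (1.0 + adjust)
        queue += produced - consumed
        history.append(queue)
    return history


def first_within(history, target, tolerance):
    """Index of the first tick where the queue is within `tolerance` of target."""
    for tick, queue in enumerate(history):
        if abs(queue - target) < tolerance:
            return tick
    return None


if __name__ == "__main__":
    for name, gain in (("low gain", 0.01), ("high gain", 0.1)):
        history = simulate(gain)
        print(name, "first gets within 30 samples of target at tick",
              first_within(history, 480.0, 30.0),
              "and ends at", round(history[-1], 2), "samples")
```

In this toy model the higher gain reaches the target sooner and ends closer to it. The model has no network jitter, so it does not show the other side of the trade-off: a real controller that reacts quickly also reacts to jitter, which is why a smoother profile suits noisier networks.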

In my tests on Ethernet, I was able to achieve a latency of 7 ms for streaming, plus 12 ms for two USB sound cards (the cards that I used for testing require 6 ms for input and output).

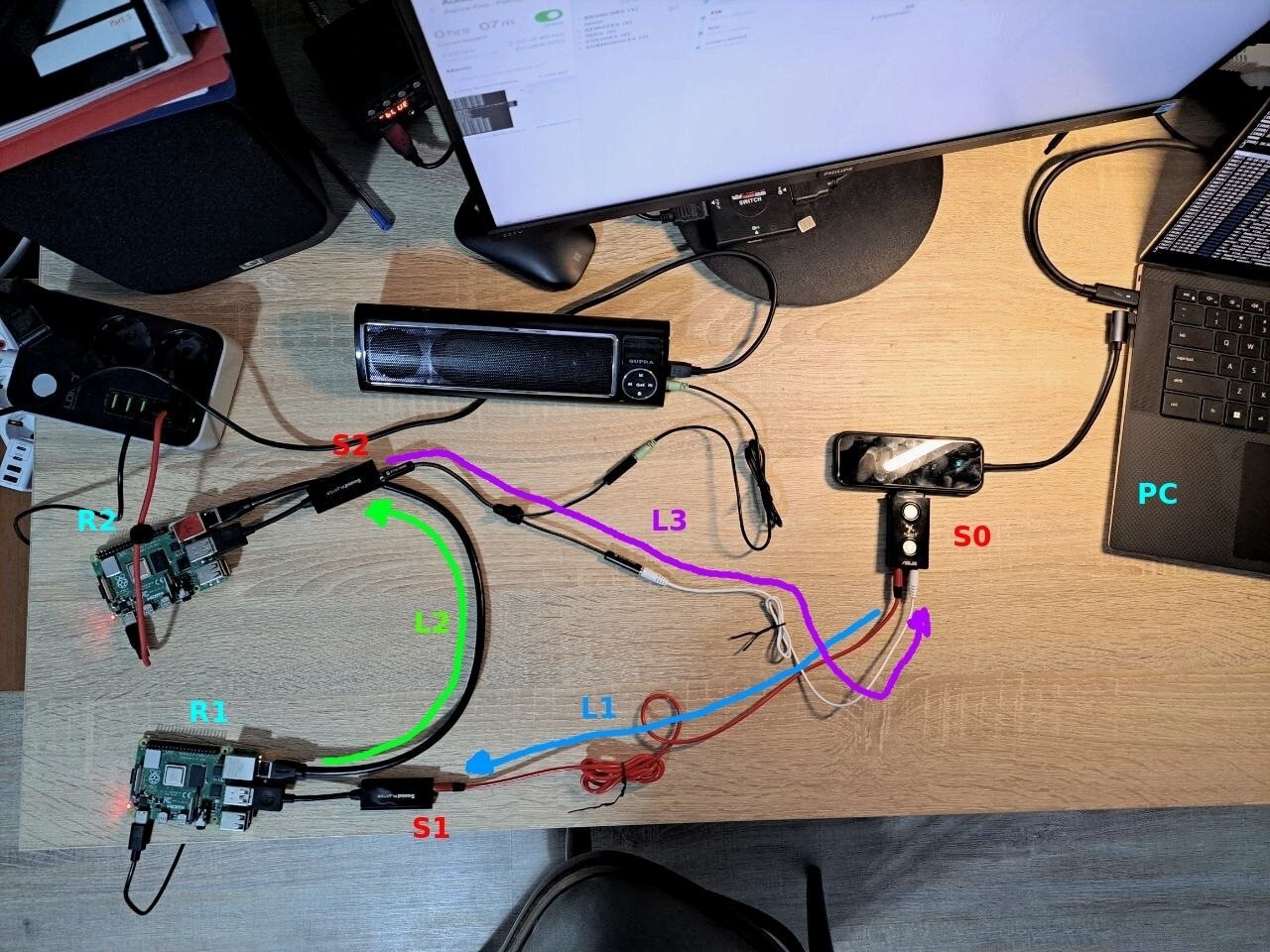

Here is the test bench that I used to measure latency (I already posted it earlier on mastodon):

Legend:

- S0, S1, S2: sound cards

- L1, L3: jack cables

- L2: Ethernet cable

- PC: laptop with signal-estimator, which writes audio to S0 output, reads from S0 input, and measures latency

- R1: Raspberry Pi with Roc sender, which reads audio from S1 input and sends it over the network

- R2: Raspberry Pi with Roc receiver, which reads audio from the network and writes it to S2 output

(There is also a jack splitter that duplicates sound from L3 to speakers).

C API

A large part of this release consists of improvements to the C API.

To name a few:

- A new networkless version of roc_sender / roc_receiver, called roc_sender_encoder / roc_receiver_decoder.

  In this API, Roc doesn’t deliver packets by itself; the user is responsible for delivery and can use any network transport. In particular, you can use this API to integrate Roc with third-party network transports like libjuice.

  Using Roc with libjuice allows combining features from both. Roc implements encoding, loss repair (FEC), jitter buffer, clock drift compensation, and format conversions, while libjuice provides P2P connectivity with NAT traversal.

  (Someday Roc will support ICE natively, but for now there is no specific estimate for that.)

- Support for removing slots on the fly.

  “Slots” represent connected peers, so adding and removing slots is needed when peers join or leave a session dynamically. Previously it was possible to add slots, but not to remove them.

- Initial version of the metrics API.

  It allows querying counters from sender and receiver. Currently, metrics include the current jitter buffer size and the estimated end-to-end latency.

- Overhaul of frame and encoding configuration.

- Support for mono, stereo, and multitrack channel layouts with up to 1024 channels.

- API to register custom network (packet) encodings.

For the full list, see the changelog.
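The networkless encoder / decoder idea from the first item can be pictured with a small toy model. This is a sketch of the general pattern only: ToyEncoder, ToyDecoder, their methods, and the packet layout are hypothetical and are not the signatures of roc_sender_encoder or roc_receiver_decoder. The point is the division of labor: the encoder turns frames into packets, the decoder turns packets back into frames, and everything in between belongs to the caller.

```python
import struct
from collections import deque


class ToyEncoder:
    """Hypothetical networkless sender: audio frames in, packets out."""

    def __init__(self, samples_per_packet):
        self._n = samples_per_packet
        self._pending = []
        self._packets = deque()
        self._seq = 0

    def push_frame(self, samples):
        # Accumulate samples and cut them into fixed-size packets.
        self._pending.extend(samples)
        while len(self._pending) >= self._n:
            chunk = self._pending[:self._n]
            self._pending = self._pending[self._n:]
            header = struct.pack("!H", self._seq & 0xFFFF)   # sequence number
            payload = struct.pack(f"!{self._n}h", *chunk)    # 16-bit samples
            self._packets.append(header + payload)
            self._seq += 1

    def pop_packet(self):
        return self._packets.popleft() if self._packets else None


class ToyDecoder:
    """Hypothetical networkless receiver: packets in, audio frames out."""

    def __init__(self, samples_per_packet):
        self._n = samples_per_packet
        self._by_seq = {}
        self._next_seq = 0

    def push_packet(self, packet):
        (seq,) = struct.unpack("!H", packet[:2])
        self._by_seq[seq] = struct.unpack(f"!{self._n}h", packet[2:])

    def pop_frame(self):
        # Return packets in sequence order; play silence for a missing one.
        samples = self._by_seq.pop(self._next_seq, None)
        self._next_seq = (self._next_seq + 1) & 0xFFFF
        return list(samples) if samples is not None else [0] * self._n


def run_demo(drop_seq=None):
    encoder = ToyEncoder(samples_per_packet=4)
    decoder = ToyDecoder(samples_per_packet=4)
    encoder.push_frame(list(range(12)))   # 12 samples become 3 packets

    packets = []
    while True:
        packet = encoder.pop_packet()
        if packet is None:
            break
        packets.append(packet)

    # The "transport" is owned by the caller. Here it delivers packets in
    # reverse order and can optionally lose one of them.
    for seq, packet in reversed(list(enumerate(packets))):
        if seq != drop_seq:
            decoder.push_packet(packet)

    return [decoder.pop_frame() for _ in range(len(packets))]
```

In a real integration, the loop in the middle of run_demo is where a transport such as libjuice would carry the packets. The real library also does far more on both sides (FEC, jitter buffer, clock drift compensation) than this toy reordering and silence insertion.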

Speexdec resampler

Roc uses resampling for two purposes: to convert between network and sound card sample rates, and for clock drift compensation.

Before 0.3, Roc had two resampler backends: “builtin” (high quality, high scaling precision, and higher CPU usage), and “speex” (high quality, worse scaling precision, and medium CPU usage).

The new “speexdec” backend combines speex for the base rate conversion with plain, low-quality decimation for the dynamic part needed to compensate clock drift. Since the dynamic part of the scaling factor is very close to 1.0, the decimation usually affects only 5-20 samples per second, which is quite tolerable.

It may be useful in two cases:

- When CPU resources are very limited and you can’t use the higher-quality “builtin” and “speex” resamplers, you can use “speexdec” and configure the network rate to be the same as the sound card rate. In this case “speexdec” only performs decimation, which is really cheap.

- When CPU resources are moderately limited and you can’t use the “builtin” resampler, you can use either “speex” or “speexdec”. “speex” gives higher quality but worse scaling precision, while “speexdec” lets you sacrifice some quality for better scaling precision. Better scaling precision means smaller latency oscillations, which in turn means a lower minimum allowed latency.

In most cases, when the CPU is not very limited and / or the latency is not very low, the “builtin” and “speex” resamplers will work fine. “speexdec” is just one more option in the user’s toolbox to cover less typical scenarios.
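The guidance above can be condensed into a small decision helper. This is only a restatement of the rules of thumb from this section as code: the function name and the "ample" / "moderate" / "tight" labels are hypothetical and are not part of any Roc API or configuration. For the "ample" case it picks “builtin”, although “speex” works fine there too.

```python
def suggest_backend(cpu, low_latency=False):
    """Suggest a resampler backend name and a short rationale.

    `cpu` is one of "ample", "moderate", "tight" (hypothetical labels).
    """
    if cpu == "tight":
        # With equal rates, speexdec only performs cheap decimation.
        return "speexdec", "set the network rate equal to the sound card rate"
    if cpu == "moderate":
        if low_latency:
            return "speexdec", "better scaling precision at some cost in quality"
        return "speex", "higher quality, worse scaling precision"
    if cpu == "ample":
        return "builtin", "high quality and high scaling precision"
    raise ValueError(f"unknown cpu budget: {cpu!r}")


if __name__ == "__main__":
    for cpu in ("ample", "moderate", "tight"):
        print(cpu, "->", suggest_backend(cpu, low_latency=True))
```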

The original motivation for developing “speexdec” was running multi-session cloud instances on cheap ARM virtual machines billed by processor time.

See the documentation for more details.
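To get a feel for the "5-20 samples per second" figure mentioned earlier, here is a rough sketch. It assumes that decimation here means dropping or repeating individual samples, and it assumes a 44100 Hz rate, which is not stated in this post. Both functions are hypothetical illustrations, not Roc code. Under these assumptions, 5-20 samples per second corresponds to a scaling factor that differs from 1.0 by roughly 110 to 450 ppm.

```python
def adjusted_samples_per_second(rate_hz, scale):
    """How many samples per second must be dropped or repeated
    to apply a scaling factor that is close to 1.0."""
    return abs(scale - 1.0) * rate_hz


def drop_or_repeat(samples, scale):
    """Naive rate adjustment without interpolation.

    Output sample k is taken from input position int(k * scale), so
    scale > 1.0 occasionally skips an input sample and scale < 1.0
    occasionally repeats one.
    """
    out = []
    k = 0
    while True:
        index = int(k * scale)
        if index >= len(samples):
            break
        out.append(samples[index])
        k += 1
    return out


if __name__ == "__main__":
    rate = 44100  # assumed sample rate
    one_second = list(range(rate))
    scale = 1.0 + 10 / rate  # sender roughly 227 ppm faster than receiver
    shorter = drop_or_repeat(one_second, scale)
    print("estimated adjustments per second:",
          round(adjusted_samples_per_second(rate, scale), 2))
    print("samples actually dropped:", len(one_second) - len(shorter))
```

Each dropped or repeated sample is a small discontinuity in the signal, which is why this approach costs some quality. It involves no filtering at all, which is why it is so cheap.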

Hacktoberfest

This Hacktoberfest went great for Roc!

We received a dozen good patches, including a lock-free PRNG (eliminating one of the few remaining priority inversion traps), improved memory protection for pools, and various refactorings that we’ve been putting off for a long time.

What truly matters to me is that several people have continued contributing after Hacktoberfest too.

Thank you so much!

If you would like to join the project too, you’re welcome; please take a look at the contribution guide. We always have plenty of detailed help-wanted issues for newcomers.

What’s next

We have already started work in the following directions:

- computing end-to-end latency (overall delay from sender to receiver, including I/O and network)

- adaptive latency mode (dynamically adjust jitter buffer size depending on network conditions)

- sender-side clock drift compensation (as an alternative to receiver-side, for cases when receiver has limited CPU resources, but sender doesn’t)

- surround sound support and automatic mapping between different surround layouts

As usual, you can follow updates on the project mailing list or on my Mastodon.